Open Land Use for Africa (OLU4Africa)

This is the first progress report of the team No. 3 of the Nairobi INSPIRE Hackathon 2019. The team is led by Dmitrii Kozuch.

You can watch the webinar recording of this team below.

In the first stage the work is more oriented on the technical development rather than contacts with the community. The work went into two parallel directions that will be described in this progress report. The ultimate goal of these two directions is to enable land use/cover classification and segmentation of satellite imagery.

The first direction is relying on creating classification models using Sentinel-2 imagery, OpenStreetMap database and Keras (with Tensorflow backend) library.

The first steps are relying in collecting training and validation data for different types of land cover/use.

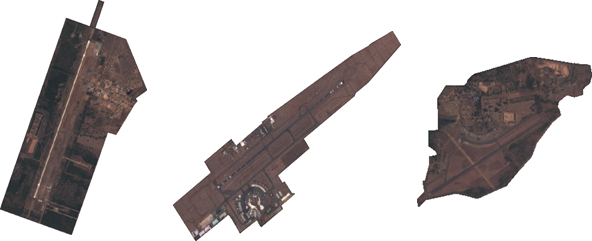

The example of data collection of aerodromes is following with single steps:

1) Download latest Kenya OSM database from the pages: http://download.geofabrik.de/africa.html

2) Import osm file into PostgreSQL database using osm2pgsql tool:

osm2pgsql --slim --username user_name --hstore –database database_name -H host_name kenya-latest.osm

3) Use script to download aerodromes images:

#Import SentinelAPI library (useful for getting metadata for Sentinel products)

from sentinelsat import SentinelAPI

api = SentinelAPI(user_name, user_password,'https://scihub.copernicus.eu/dhus')

import datetime

import requests

import shutil

import psycopg2

import psycopg2.extras

#Establish connection where osm2pgsql has saved latest Kenya OSM data

connection=psycopg2.connect("dbname=database_name user=user_name password=user_password host=host_name")

cursor=connection.cursor(cursor_factory=psycopg2.extras.DictCursor)

#Select osm_id, centroid and geometry of all aerodromes in OSM database

cursor.execute("select osm_id, st_astext(st_centroid(st_transform(way,4326))) as centroid, st_astext(st_flipcoordinates(st_transform(way,4326))) as geom from planet_osm_polygon where aeroway='aerodrome'")

aerodromes=cursor.fetchall()

connection.close()

folder='folder_to_save_images'

#For each aerodrome from Kenya OSM database

for aerodrome in aerodromes:

#Query all images from Sentinel-2 products in year 2018 that had cloudcoverage less then 5

products = api.query(aerodrome['centroid'], date=(datetime.date(2018, 1, 1),datetime.date(2019,1,1)), platformname='Sentinel-2', cloudcoverpercentage=(0, 5))

#From the queried products select the one with the minimum cloudcoverage

product=list(products.keys())[0]

min_value=products[product]['cloudcoverpercentage']

for p in products:

if products[p]['cloudcoverpercentage']<min_value:

product=p

min_value=products[p]['cloudcoverpercentage']

#Get timestamp when the selected product was collected

date=products[product]['beginposition'].strftime('%Y-%m-%dT%H:%M:%SZ')

#Create url link to download image from mundi WCS for the selected object for the selected date

url='http://shservices.mundiwebservices.com/ogc/wcs/d275ef59-3f26-4466-9a60-ff837e572144?version=2.0.0&SERVICE=WCS&REQUEST=GetCoverage&coverage=1_NATURAL_COL0R&FORMAT=GeoTIFF&SUBSET=time("%s")&GEOMETRY=%s&RESX=0.0001&RESY=0.0001&CRS=EPSG:4326' % (date,aerodrome['geom'])

#Download and save image

r=requests.get(url, stream=True)

if r.status_code == 200:

with open(folder+str(aerodrome['osm_id'])+'.tif', 'wb') as f:

shutil.copyfileobj(r.raw, f)

As a result it is 70 sample images that could be use for training and validation of airports and classification model. The model itself will be trained in Keras library using Convolution Neural Network algorithm. There were lots of similar intentions for various image classifications implemented in Keras. Here is an example to classify CIFAR-10 dataset – https://keras.io/examples/cifar10_cnn/ . The dataset itself is composed of 6 000 images for each following class: airplanes, cars, birds, cats, deer, dogs, frogs, horses, ships, and trucks.

Obviously except the problem with classification we need to tackle problem with image segmentation. So far it wasn‘t time to research existing algorithms for this.

The second

parallel way that it is worked on is to use eo-learn library and Africover

dataset (http://www.un-spider.org/links-and-resources/data-sources/land-cover-kenya-africover-fao

) for the land cover classification. The tutorial doing this is found on the

official library web page:

https://eo-learn.readthedocs.io/en/latest/examples/land-cover-map/SI_LULC_pipeline.html

It has been attempted to use the code provided, however there are some error emerging here and there. Thus there is a need to debug the code. The eo-learn library is quite young and thus it is natural to expect some bugs. All in all priority will be given to the first way because it has been tested many times so far and generally could give accuracy of classification higher than 70% . The only thing is that sometimes it could be too few samples to train and validate models so it will be need to download samples from the other countries in Africa.